We have addressed the problem of object detection and tracking for video surveillance systems. To this end, we have proposed integrating an Edge TPU-based Coral Dev Board into a Hikvision video surveillance system. This considerably enhances the system's video analytics capabilities; for example, object detection can be performed at 75 FPS using the TensorFlow Object Detection API and MobileNet SSD deep learning models. This preliminary evaluation encourages future developments, including modules for crowd density monitoring, facial recognition, people counting, behaviour analysis and detection, and vehicle monitoring.

THE PROPOSED SYSTEM

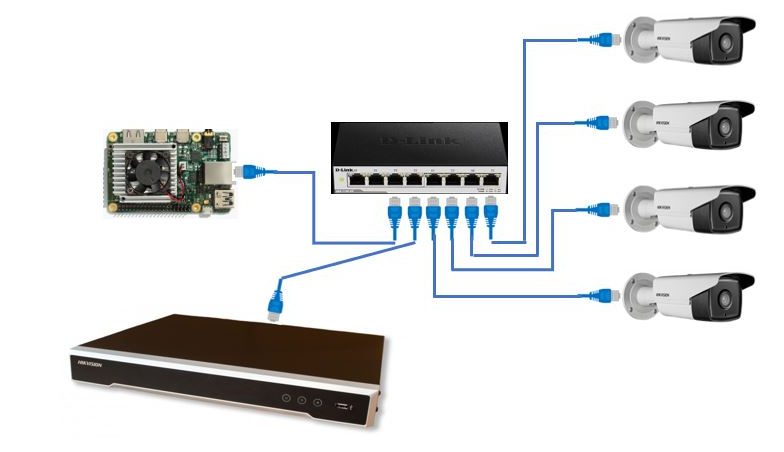

The components of the experimental setup are presented in Fig. 3. They are briefly described below, together with the reasons for including the Google Edge TPU-based “Coral” Development Board in a video surveillance system rather than another embedded Machine Learning (ML) accelerator.

Fig. 3. The System Overview.

A. The Hardware

- Hikvision DS-7608NI-K2-8P, an 8-channel Network Video Recorder (NVR) with 8 built-in PoE ports. It supports up to eight IP cameras of up to 8 MP and the H.265/H.264/MPEG4 video formats (reducing storage and bandwidth requirements), and it has 2 HDD bays with a maximum capacity of 20 TB [11].

- Four Hikvision DS-2CD2T32-I5 IP outdoor bullet cameras, capable of recording 30 frames per second at full HD (up to 3 megapixels, 2048 × 1536 resolution) and of providing clear night-time images at distances of up to 50 meters thanks to EXIR technology. These features make them suitable for monitoring large open areas [12].

- D-Link DGS-1100-08, an 8-port Gigabit PoE Smart Managed Switch with 8 × 10/100/1000BASE-T ports and a 64 W PoE budget [13].

- Machine learning accelerator: Google Edge TPU (Coral Dev Board), with the following main technical specifications: NXP i.MX 8M SoC CPU (quad-core Cortex-A53 and Cortex-M4F), integrated GC7000 Lite graphics GPU, Google Edge TPU coprocessor and 1 GB LPDDR4 RAM [14].
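To give the NVR storage figure above a practical meaning, the sketch below estimates how many days of continuous recording 20 TB could hold for the four cameras. It is only an illustration under stated assumptions: the per-camera bitrate is a hypothetical value (real H.265/H.264 bitrates depend on resolution, frame rate and scene content), decimal terabytes are used, and filesystem overhead is ignored.

```python
# Minimal sketch: how long the NVR can record continuously.
# ASSUMPTIONS (not from the paper): constant bitrate per camera, decimal units
# (1 TB = 10**12 bytes) and no filesystem or redundancy overhead.

SECONDS_PER_DAY = 86_400


def retention_days(capacity_tb: float, cameras: int, bitrate_mbps: float) -> float:
    """Days of 24/7 recording that fit into `capacity_tb` terabytes."""
    capacity_bytes = capacity_tb * 10**12
    # Aggregate write rate of all cameras, converted from Mbit/s to bytes/s.
    bytes_per_second = cameras * bitrate_mbps * 10**6 / 8
    return capacity_bytes / (bytes_per_second * SECONDS_PER_DAY)


if __name__ == "__main__":
    # Hypothetical example: the four cameras at 4 Mbit/s each on the 20 TB NVR.
    print(f"{retention_days(20, 4, 4):.1f} days")
```

With these hypothetical numbers the script prints `115.7 days`; halving the bitrate, as a more efficient codec might, doubles the retention time.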

Although many high-speed embedded ML solutions have recently become available on the market [15], e.g. the Intel Movidius Neural Compute Stick or the NVIDIA Jetson Nano, a Tegra SoC-based development platform, the Edge TPU coprocessor presents certain advantages over its competitors:

- it is very power-efficient, being capable of performing 4 trillion operations per second (TOPS) while using only 0.5 W per TOPS (i.e., 2 W at full throughput).

- the remaining SoC components stay available for other tasks: the GPU for traditional image and video processing, and the Cortex-M4F core for communicating with other sensors, such as temperature or ambient light sensors.

- built-in support for Wi-Fi and Bluetooth.

Moreover, according to an NVIDIA study [16], in the particular case of object detection the Google Edge TPU Dev Board outperforms its competitors (Tab. 1).

| Model | Application | Framework | NVIDIA Jetson Nano | Raspberry Pi 3 | Raspberry Pi 3 + Intel Neural Compute Stick 2 | Google Edge TPU Dev Board (Coral) |
|---|---|---|---|---|---|---|
| SSD MobileNet-V2 (300×300) | Object Detection | TensorFlow | 39 FPS | 1 FPS | 11 FPS | 48 FPS |

Tab. 1. A comparison of ML accelerator platforms for the object detection task.
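Frame rates such as those in Tab. 1 are easier to relate to a real-time requirement when converted into per-frame latency (1000 / FPS milliseconds). The sketch below does this for the reported values and compares each platform with the 30 FPS frame rate of the cameras described above. It assumes a single video stream per accelerator; the dictionary keys are merely illustrative labels.

```python
# Per-frame latency and relative speedup for the values reported in Tab. 1.
from typing import Dict

FPS_TAB1: Dict[str, float] = {
    "NVIDIA Jetson Nano": 39,
    "Raspberry Pi 3": 1,
    "Raspberry Pi 3 + Intel NCS2": 11,
    "Google Edge TPU Dev Board (Coral)": 48,
}
CAMERA_FPS = 30  # frame rate of the IP cameras used in the setup


def frame_time_ms(fps: float) -> float:
    """Milliseconds spent per frame at a given throughput."""
    return 1000.0 / fps


def speedups(fps_by_platform: Dict[str, float]) -> Dict[str, float]:
    """Throughput of each platform relative to the slowest one."""
    slowest = min(fps_by_platform.values())
    return {name: fps / slowest for name, fps in fps_by_platform.items()}


if __name__ == "__main__":
    budget = frame_time_ms(CAMERA_FPS)  # ~33.3 ms per frame for one 30 FPS stream
    for name, ratio in speedups(FPS_TAB1).items():
        ms = frame_time_ms(FPS_TAB1[name])
        verdict = "keeps up" if ms <= budget else "drops frames"
        print(f"{name:34s} {ms:7.1f} ms/frame  x{ratio:<4.0f} {verdict}")
```

Under this single-stream assumption, only the Jetson Nano (about 25.6 ms per frame) and the Coral board (about 20.8 ms per frame) stay within the 33.3 ms budget of a 30 FPS camera.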

B. The Software

- TensorFlow Lite and the TensorFlow Object Detection API. TensorFlow Lite enables low-latency inference on mobile and embedded devices with a small binary size; moreover, only TensorFlow Lite models can be compiled to run on the Edge TPU. The Object Detection API enables us to define, train and deploy object detection models.

- Pre-compiled models for image classification, object detection and on-device retraining (with the last fully-connected layer removed), listed in Tab. 2.

| Image classification | Object detection | On-device retraining |
|---|---|---|
| EfficientNet-EdgeTpu (dataset: ImageNet): recognizes 1,000 types of objects | MobileNet SSD v1 (dataset: COCO): detects the location of 90 types of objects | MobileNet v1 embedding extractor: last fully-connected layer removed |
| MobileNet V1 (dataset: ImageNet): recognizes 1,000 types of objects | MobileNet SSD v2 (dataset: COCO): detects the location of 90 types of objects | MobileNet v1 with L2-norm: includes an L2-normalization layer; the last fully-connected layer executes on the CPU to enable retraining |
| MobileNet V2 (datasets: ImageNet, iNat insects, iNat plants, iNat birds) | MobileNet SSD v2 (dataset: Open Images v4): detects the location of human faces | |
| Inception V2, V3, V4 (dataset: ImageNet) | | |

Tab. 2. Pre-compiled models available for Edge TPU.

- OpenCV 4.1.1 (the latest version available at the time of writing), mainly for reading, writing and displaying video streams.

- Python 3.7, with virtualenv and virtualenvwrapper for code development and project environment management.
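As a minimal sketch of how these software components fit together, the code below loads an Edge TPU-compiled TensorFlow Lite model through the `tflite_runtime` interpreter with the Edge TPU delegate and reads the model's expected input size. It assumes that the `tflite_runtime` package and the Edge TPU runtime (`libedgetpu.so.1` on Linux) are installed, and that the model is a fully 8-bit quantized `.tflite` file already processed by the Edge TPU Compiler; the model file name is an assumed placeholder, not necessarily the one used by the authors.

```python
# Minimal sketch, assuming tflite_runtime and the Edge TPU runtime are
# installed on the Coral Dev Board. The model path is an assumed placeholder.
from typing import List, Tuple

EDGETPU_SHARED_LIB = "libedgetpu.so.1"  # Edge TPU runtime library on Linux
MODEL_PATH = "ssd_mobilenet_v2_coco_quant_postprocess_edgetpu.tflite"  # assumed


def make_interpreter(model_path: str, use_edgetpu: bool = True):
    """Create a TF Lite interpreter, optionally delegating ops to the Edge TPU.

    A CPU-only run (use_edgetpu=False) needs the non-compiled .tflite model,
    because an *_edgetpu.tflite file contains a custom Edge TPU operator.
    """
    # Imported lazily so that input_size() can be used without the runtime.
    from tflite_runtime.interpreter import Interpreter, load_delegate

    delegates = [load_delegate(EDGETPU_SHARED_LIB)] if use_edgetpu else []
    interpreter = Interpreter(model_path=model_path, experimental_delegates=delegates)
    interpreter.allocate_tensors()
    return interpreter


def input_size(input_details: List[dict]) -> Tuple[int, int]:
    """Return (width, height) of the model input from get_input_details()."""
    # SSD models expect a single NHWC tensor: [1, height, width, 3].
    _, height, width, _ = input_details[0]["shape"]
    return int(width), int(height)


if __name__ == "__main__":
    interpreter = make_interpreter(MODEL_PATH)
    print("Model input size (w, h):", input_size(interpreter.get_input_details()))
```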

The first part of the experiment aimed to assess the performance gain brought by the TPU coprocessor over the classical embedded ARM application processor. This comparison was carried out on the “Town Centre” dataset, processed with the MobileNet SSD v1 model through the TensorFlow Object Detection API. The Edge TPU was about 30 times faster than the ARM CPU, with an average inference time of 10 ms (see below).

CPU vs. TPU benchmark on the “Town Centre” dataset.

The second part of the experiment used the Coral Dev Board to process the live video streams provided by the NVR and the IP surveillance cameras. The results shown in Fig. 5 were obtained with the pre-compiled MobileNet SSD v2 model, which achieved better detection accuracy than MobileNet SSD v1. As pointed out previously, this model was pre-trained on the COCO dataset [19] and can detect the location of 90 different object classes in real time.

Coral Dev Board integrated in a video surveillance system (only detections with a confidence score above 40% are displayed).
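The live-stream experiment can be sketched as the loop below: frames are read from an NVR channel with OpenCV, converted and resized to the model input, passed through the Edge TPU interpreter, and only detections scoring above 0.4 (the 40% display threshold mentioned above) are drawn. Several details are assumptions rather than the authors' code: the RTSP URL follows the common Hikvision `/Streaming/Channels/<id>` pattern with placeholder credentials and IP, the model path is a placeholder, the input is uint8, and the model is an Edge TPU-compiled SSD with the TF Lite detection post-processing operator whose first four outputs are ordered boxes, classes, scores, count (the order can differ for models exported with other TensorFlow versions). Class IDs are drawn as raw numbers; mapping them to COCO label names is omitted.

```python
# Minimal sketch of the live detection loop (assumptions noted inline).
from typing import List, Sequence, Tuple

SCORE_THRESHOLD = 0.4  # only detections above 40% are displayed
# Hypothetical NVR stream address (placeholder credentials and IP).
RTSP_URL = "rtsp://user:password@192.168.1.64:554/Streaming/Channels/101"

Detection = Tuple[int, float, Tuple[float, ...]]


def filter_detections(boxes, classes, scores, count,
                      threshold: float = SCORE_THRESHOLD) -> List[Detection]:
    """Keep (class_id, score, box) for detections with score > threshold."""
    return [
        (int(classes[i]), float(scores[i]), tuple(float(v) for v in boxes[i]))
        for i in range(int(count))
        if scores[i] > threshold
    ]


def to_pixels(box: Sequence[float], width: int, height: int) -> Tuple[int, int, int, int]:
    """Convert a normalized [ymin, xmin, ymax, xmax] box to pixel (x1, y1, x2, y2)."""
    ymin, xmin, ymax, xmax = box
    return int(xmin * width), int(ymin * height), int(xmax * width), int(ymax * height)


def run(interpreter) -> None:
    """Read frames, run detection and display boxes until 'q' is pressed."""
    import cv2
    import numpy as np

    input_details = interpreter.get_input_details()
    input_index = input_details[0]["index"]
    _, in_h, in_w, _ = input_details[0]["shape"]
    # ASSUMPTION: outputs ordered boxes, classes, scores, count.
    outputs = interpreter.get_output_details()[:4]
    cap = cv2.VideoCapture(RTSP_URL)
    while cap.isOpened():
        ok, frame = cap.read()
        if not ok:
            break
        # OpenCV delivers BGR frames; the COCO SSD models expect RGB.
        rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        resized = cv2.resize(rgb, (int(in_w), int(in_h)))
        interpreter.set_tensor(input_index, np.expand_dims(resized, axis=0))
        interpreter.invoke()
        boxes, classes, scores, count = (interpreter.get_tensor(d["index"])[0] for d in outputs)
        h, w = frame.shape[:2]
        for class_id, score, box in filter_detections(boxes, classes, scores, count):
            x1, y1, x2, y2 = to_pixels(box, w, h)
            cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
            cv2.putText(frame, f"{class_id}: {score:.0%}", (x1, max(y1 - 5, 0)),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 1)
        cv2.imshow("detections", frame)
        if cv2.waitKey(1) & 0xFF == ord("q"):
            break
    cap.release()
    cv2.destroyAllWindows()


if __name__ == "__main__":
    from tflite_runtime.interpreter import Interpreter, load_delegate

    interp = Interpreter(model_path="model_edgetpu.tflite",  # hypothetical path
                         experimental_delegates=[load_delegate("libedgetpu.so.1")])
    interp.allocate_tensors()
    run(interp)
```

Keeping the filtering and coordinate conversion in small pure functions makes them easy to test off the board, while everything that needs the camera stream or the accelerator stays inside `run()`.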